Understanding Memory Management in Linux

Efficient memory management is crucial for the performance and stability of Linux-based systems. This article explores how Linux manages memory, including key concepts such as pages, frames, virtual memory, paging, the roles of the MMU and TLB, swap space, Huge Pages, and Transparent Huge Pages (THP).

We’ll break it down into multiple chapters, covering both theoretical and practical aspects with examples.

Chapter 1: Basics of Memory Management

Process Address Space Layout

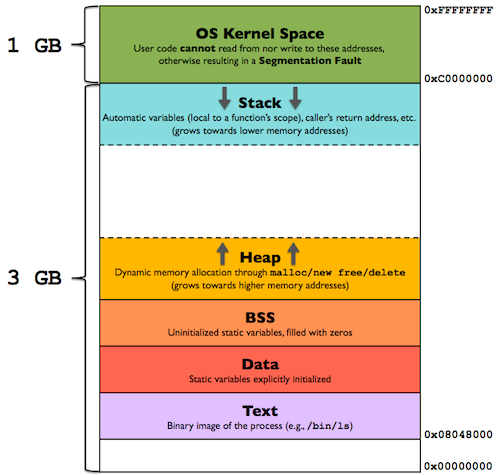

A Linux process has the following memory regions:

Text Segment: Contains executable code.

Data Segment: Stores global/static variables.

Heap: Used for dynamic memory allocation.

Stack: Stores function call frames and local variables.

Memory-Mapped Files and Shared Libraries: Enable efficient memory access.

Kernel Mechanisms for Memory Tracking

Page Tables & Memory Management Unit (MMU): Map virtual to physical memory.

Proc Filesystem (/proc/[pid]/maps, /proc/[pid]/status): Provides per-process memory stats.

Out-of-Memory (OOM) Killer: Terminates processes in low-memory conditions.
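Each line of /proc/[pid]/maps describes one mapped region as an address range, permissions, offset, device, inode, and an optional path. The sketch below parses one such line; the function name parse_maps_line and the sample line are illustrative assumptions, not output captured from a real system.

```python
def parse_maps_line(line):
    """Parse one line of /proc/[pid]/maps into a small dict."""
    # Fields: address-range perms offset dev inode [pathname]
    fields = line.split(maxsplit=5)
    start, end = (int(part, 16) for part in fields[0].split("-"))
    return {
        "start": start,
        "end": end,
        "size_kb": (end - start) // 1024,
        "perms": fields[1],
        # Anonymous mappings have no pathname field
        "path": fields[5].strip() if len(fields) > 5 else "",
    }


# Made-up sample line in the maps format (not real output)
sample = "00400000-0040b000 r-xp 00000000 08:01 1234 /bin/cat"

if __name__ == "__main__":
    print(parse_maps_line(sample))
```

On a Linux machine, the same function can be applied to every line read from /proc/self/maps to list the regions of the running Python process.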

1.1 Pages and Frames

Linux manages memory using fixed-size units called "pages". The default page size on most architectures (including x86-64) is 4KB, though systems can be configured to use larger pages (e.g., Huge Pages).

Page → A fixed-sized unit of virtual memory.

Frame → A fixed-sized unit of physical memory (RAM).

Page Size → Typically 4KB, but can be 2MB or 1GB for Huge Pages.

💡 Example:

If a system has 4GB of RAM, and each page is 4KB, then there are 4GB / 4KB = 1,048,576 page frames.
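The arithmetic for this example can be checked with a few lines of Python. This is a minimal sketch that treats 4GB as 4 × 1024³ bytes and also shows how the count shrinks for the 2MB and 1GB Huge Page sizes mentioned above; the helper name frame_count is made up for illustration.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def frame_count(ram_bytes, page_bytes):
    """Number of whole page frames that fit in ram_bytes."""
    return ram_bytes // page_bytes


if __name__ == "__main__":
    ram = 4 * GIB
    for label, size in [("4KB", 4 * KIB), ("2MB", 2 * MIB), ("1GB", GIB)]:
        print(f"{label} pages: {frame_count(ram, size):,}")
```

Fewer, larger pages mean fewer mappings for the system to track, which is the main appeal of Huge Pages.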

To learn more about huge pages, please refer to this article.

1.2 Virtual Memory and Paging

Modern systems do not let processes access physical memory directly. Instead, they use virtual memory, which allows:

Each process to have its own private address space (preventing interference).

More memory than physically available by using disk space (swap).

Efficient memory management with paging.

Paging Mechanism

Paging divides both virtual memory (used by programs) and physical memory (RAM) into fixed-size pages and frames. The operating system maps virtual pages to physical frames using a Page Table.
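The mapping described above can be sketched as a toy model: a virtual address is split into a page number and an offset, the page number is looked up in the page table, and the offset is carried over unchanged. The page table contents below are invented for illustration; a real page table is a kernel-managed structure, not a Python dict.

```python
PAGE_SIZE = 4096  # 4KB pages

# Hypothetical page table: virtual page number -> physical frame number
page_table = {0: 7, 1: 3, 2: 12}


def translate(vaddr):
    """Translate a virtual address to a physical address."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        # In a real system this is where a page fault would be raised
        raise LookupError(f"page fault: virtual page {vpn} is not mapped")
    return page_table[vpn] * PAGE_SIZE + offset


if __name__ == "__main__":
    print(hex(translate(0x1ABC)))  # page 1 maps to frame 3 -> 0x3abc
```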

Chapter 2: How Linux Knows How Much Memory a Process Needs

Process memory layout

The memory layout of a program consists of several segments:

Text Segment: Stores executable instructions, typically read-only and shared among processes to prevent modification.

Data Segment: Contains initialized global and static variables; divided into read-only (RoData) and read-write areas.

BSS Segment: Holds uninitialized global and static variables, which the kernel initializes to zero; it is read-write.

Stack: A LIFO structure for function calls, storing local variables and return addresses; grows downward in memory.

Heap: Used for dynamic memory allocation; managed by malloc/free or new/delete and grows upward in memory.

Checking Memory Usage in CentOS

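To see how a process's memory regions are mapped, run:

cat /proc/<PID>/maps

Replace <PID> with the process ID. Each line of the output describes one mapped region. For the total virtual and resident (physical) memory in use, check the VmSize and VmRSS fields in /proc/<PID>/status.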

2.1 Using the size Command

To check how much memory a compiled program requires, use the size command:

size /bin/ls

Example Output

text data bss dec hex

12004 1576 352 13932 366c

Text → Executable code (read-only).

Data → Initialized global/static variables.

BSS → Uninitialized global/static variables (zero-filled at load time; they take up no space in the file on disk).

Dec & Hex → Total size in decimal & hexadecimal.
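The Dec and Hex columns in the sample output are simply the sum of the other three columns. A quick check in Python, using the numbers from the example above (the helper name size_totals is hypothetical):

```python
def size_totals(text, data, bss):
    """Return the total size as (decimal, hexadecimal string)."""
    dec = text + data + bss
    return dec, format(dec, "x")


if __name__ == "__main__":
    print(size_totals(12004, 1576, 352))  # (13932, '366c')
```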

Chapter 3: The Role of the MMU and Page Table

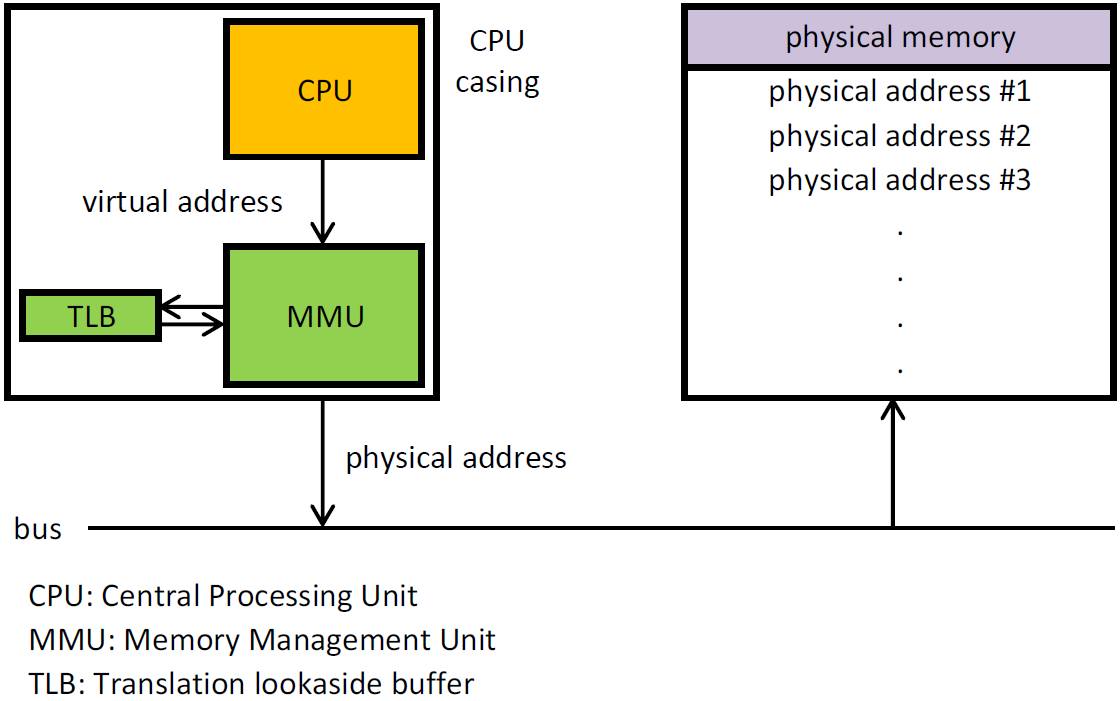

3.1 How CPU Translates Virtual Addresses to Physical Memory

When a program accesses memory, it does not work with physical addresses directly. Instead, the CPU generates a virtual address, which is translated into a physical address by the Memory Management Unit (MMU).

Steps in Address Translation

CPU generates a virtual address → Sent to the MMU.

MMU checks the Page Table (stored in RAM) to find the corresponding physical address.

Page Table lookups can be slow → To optimize this, the CPU caches recent translations in a TLB (Translation Lookaside Buffer).
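One reason the lookup is slow is that page tables are usually multi-level. The sketch below assumes x86-64 with 4-level paging and 4KB pages, which this article does not specify: a 48-bit virtual address is split into four 9-bit table indices plus a 12-bit offset, so an uncached translation needs up to four dependent memory reads. The function name split_x86_64 is made up for illustration.

```python
def split_x86_64(vaddr):
    """Split a 48-bit virtual address into 4-level paging indices.

    Assumes x86-64 4-level paging with 4KB pages.
    Returns (pml4, pdpt, pd, pt, offset).
    """
    offset = vaddr & 0xFFF        # bits 0-11: byte within the page
    pt = (vaddr >> 12) & 0x1FF    # bits 12-20: page table index
    pd = (vaddr >> 21) & 0x1FF    # bits 21-29: page directory index
    pdpt = (vaddr >> 30) & 0x1FF  # bits 30-38: directory pointer index
    pml4 = (vaddr >> 39) & 0x1FF  # bits 39-47: top-level index
    return pml4, pdpt, pd, pt, offset


if __name__ == "__main__":
    addr = (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 5
    print(split_x86_64(addr))  # (1, 2, 3, 4, 5)
```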

Chapter 4: Speeding Up Memory Access with TLB

4.1 What is the Translation Lookaside Buffer (TLB)?

A TLB is a small, high-speed cache inside the CPU that stores recently used virtual-to-physical address mappings.

TLB Lookup Process

CPU requests memory

TLB checks if the page mapping exists:

TLB Hit → The mapping is found, and memory is accessed without consulting the Page Table.

TLB Miss → The MMU must check the Page Table, slowing things down.
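The hit/miss behaviour above can be imitated with a small cache placed in front of a page table. This is a toy model only: the class name TinyTLB, the capacity of two entries, the page table contents, and the least-recently-used eviction policy are all assumptions chosen for illustration, and real TLB hardware may behave differently.

```python
from collections import OrderedDict

PAGE_SIZE = 4096


class TinyTLB:
    """Toy TLB: a small LRU cache in front of a page table dict."""

    def __init__(self, page_table, capacity=2):
        self.page_table = page_table
        self.capacity = capacity
        self.entries = OrderedDict()  # virtual page -> physical frame
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)  # mark as most recently used
        else:
            self.misses += 1
            # Miss: fall back to the (slow) page table, then cache it
            self.entries[vpn] = self.page_table[vpn]
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the oldest entry
        return self.entries[vpn] * PAGE_SIZE + offset


if __name__ == "__main__":
    tlb = TinyTLB({0: 7, 1: 3, 2: 12})
    for addr in (0x0000, 0x0004, 0x1000, 0x2000, 0x0008):
        tlb.translate(addr)
    print(f"hits={tlb.hits} misses={tlb.misses}")
```

Accesses that stay within a few pages hit the cache repeatedly, while jumping across more pages than the TLB can hold causes misses. This is also why larger pages help: each entry covers more memory.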

Chapter 5: Page Faults and Swap Space

5.1 What Happens When a Page is Not in RAM?

If a program accesses a memory page that is not loaded in RAM, a Page Fault occurs. The system must:

Check if the page exists in Swap Space (disk).

If yes → Load it from Swap back into RAM.

If no → Allocate a new page in RAM.
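The "allocate a new page" case can be observed from user space. The sketch below maps an anonymous region, writes one byte to each page, and reports how many minor page faults the process incurred while doing so. It assumes a Unix-like system where Python's resource module reports ru_minflt; the function name touch_pages is hypothetical, and the exact count varies between systems (for example, Transparent Huge Pages can reduce it).

```python
import mmap
import resource

PAGE_SIZE = mmap.PAGESIZE


def minor_faults():
    """Minor page faults (no disk I/O) incurred by this process so far."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_minflt


def touch_pages(num_pages):
    """Map num_pages anonymous pages, touch each one, return the fault delta."""
    region = mmap.mmap(-1, num_pages * PAGE_SIZE)  # nothing is backed by RAM yet
    before = minor_faults()
    for i in range(num_pages):
        region[i * PAGE_SIZE] = 1  # first write to a page triggers a fault
    after = minor_faults()
    region.close()
    return after - before


if __name__ == "__main__":
    print("minor faults while touching 1024 pages:", touch_pages(1024))
```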

Too many page faults? Check with:

vmstat 1 10

Look at the si (swap-in) and so (swap-out) columns. Sustained high values mean excessive paging, indicating a memory bottleneck.
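The same swap activity can also be read programmatically. On Linux, /proc/vmstat exposes cumulative pswpin and pswpout counters (pages swapped in and out since boot). The sketch below parses those two counters from text in that format and computes a per-second rate between two snapshots. The function names and the sample values are made up for illustration.

```python
def parse_swap_counters(vmstat_text):
    """Extract pswpin/pswpout from text in /proc/vmstat format."""
    counters = {}
    for line in vmstat_text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0] in ("pswpin", "pswpout"):
            counters[parts[0]] = int(parts[1])
    return counters


def swap_rate(before, after, interval_s):
    """Pages swapped per second between two counter snapshots."""
    return {key: (after[key] - before[key]) / interval_s for key in before}


if __name__ == "__main__":
    # Illustrative snapshots taken 10 seconds apart (not real measurements)
    first = parse_swap_counters("pgfault 1000\npswpin 50\npswpout 200\n")
    second = parse_swap_counters("pgfault 1500\npswpin 80\npswpout 700\n")
    print(swap_rate(first, second, 10))
```

On a real system, the two snapshots would come from reading /proc/vmstat twice with a sleep in between.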

How Linux Handles Memory Shortages: Swap Space

When RAM is full, Linux swaps less frequently used memory pages to disk to free up space. However, since disk access is much slower than RAM, excessive swapping can degrade performance.

Checking and Managing Swap in CentOS

To see swap usage:

swapon --summary

To disable swap (not recommended for most systems):

swapoff -a

To re-enable it:

swapon -a # Enable all swap devices listed in /etc/fstab

For optimal performance, Linux allows tuning of swap behavior via swappiness, which controls how aggressively swap is used.

Check the current value:

cat /proc/sys/vm/swappiness

A lower value (e.g., 10) makes the system prefer keeping pages in RAM, while a higher value (e.g., 60, the usual default) makes it more likely to swap.

Change swappiness temporarily:

echo 10 > /proc/sys/vm/swappiness

For a permanent change, edit /etc/sysctl.conf:

vm.swappiness=10

By understanding Linux memory management, you can optimize performance and stability for your system. 🚀