[System Design] Token Bucket Algorithm for Rate Limiting: From Single Server to Distributed Systems

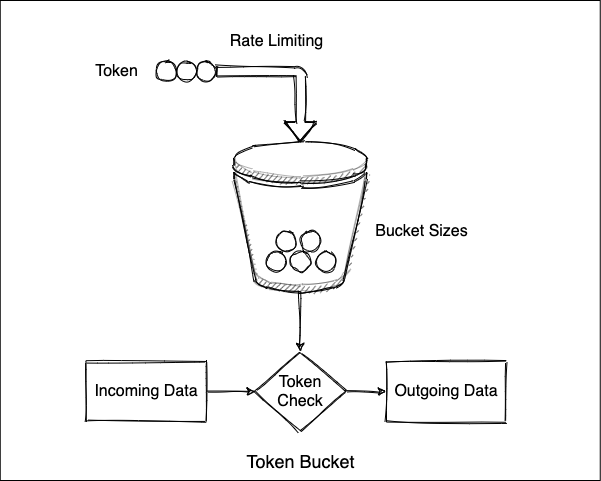

Rate limiting is an essential technique for protecting APIs and services from overuse, whether malicious or unintentional. Among the various rate limiting algorithms, the Token Bucket stands out for its simplicity and effectiveness.

Understanding the Token Bucket Algorithm

The Token Bucket algorithm uses a simple metaphor: imagine a bucket that holds tokens. Tokens are added to the bucket at a constant rate (the refill rate), and each incoming request consumes a token. If tokens are available, the request proceeds; otherwise, it's rejected or delayed.

Core Components:

Bucket: A container with a maximum capacity

Tokens: Units that represent permission to process requests

Refill Rate: How quickly new tokens are added (e.g., 4 tokens per second)

Implementation for a Single Server

Here's our Python implementation:

import time

class TokenBucket:

def __init__(self, refill_rate, bucket_capacity):

"""

Initialize the token bucket.

Args:

refill_rate: Number of tokens to add per second

bucket_capacity: Maximum number of tokens the bucket can hold

"""

self.refill_rate = refill_rate

self.bucket_capacity = bucket_capacity

self.current_tokens = bucket_capacity # Start with a full bucket

self.last_refill_time = time.time()

def allow_request(self, tokens_required=1):

"""

Check if a request can be allowed and consume tokens if possible.

Args:

tokens_required: Number of tokens required for this request

Returns:

Boolean indicating if the request is allowed

"""

self.refill()

if self.current_tokens >= tokens_required:

self.current_tokens -= tokens_required

return True

else:

return False

def refill(self):

"""

Refill the bucket based on elapsed time since last refill.

"""

current_time = time.time()

time_elapsed = current_time - self.last_refill_time

# Calculate tokens to add based on elapsed time

tokens_to_add = time_elapsed * self.refill_rate

# Update token count and cap at bucket capacity

self.current_tokens = min(self.bucket_capacity, self.current_tokens + tokens_to_add)

# Update last refill time

self.last_refill_time = current_time

Example: Single Server Scenario

Let's walk through a concrete example with:

Bucket capacity: 10 tokens

Refill rate: 4 tokens per second

Timeline of Events:

t = 0ms:

Initial state: Bucket has 10 tokens

Request arrives

Allow request, consume 1 token

Remaining tokens: 9

t = 300ms:

Time elapsed: 300ms

Tokens added: 4 tokens/sec × 0.3 sec = 1.2 tokens

Tokens before request: 9 + 1.2 = 10.2 tokens (capped at 10)

Request arrives

Allow request, consume 1 token

Remaining tokens: 9

This works perfectly for a single server, but what happens in a distributed environment?

The Distributed Challenge

In modern architectures, applications typically run across multiple servers or containers for scalability and reliability. This presents a challenge for rate limiting.

Problem: Independent Buckets

If each server maintains its own token bucket:

A system with 4 replicas would effectively allow 4 times the intended rate limit

Clients could bypass rate limits by distributing requests across different servers

The overall system would lack consistent enforcement of rate limits

Scenario with 4 Replicas (Independent Buckets)

If each of our 4 servers has its own bucket with capacity 10 and refill rate 4 tokens/sec:

Total system capacity: 40 tokens

Total system refill rate: 16 tokens/sec

This effectively quadruples our rate limit, which is unlikely to be the intended behavior.

Solutions for Distributed Rate Limiting

Centralized Token Storage

The most common solution is to use a centralized data store for token information:

Redis-Based Implementation:

Store token counts in Redis

Use atomic operations for incrementing/decrementing

Leverage Redis's time-to-live features for token refills

import time

import redis

class DistributedTokenBucket:

def __init__(self, redis_client, key_prefix, refill_rate, bucket_capacity):

self.redis = redis_client

self.key_prefix = key_prefix

self.refill_rate = refill_rate

self.bucket_capacity = bucket_capacity

def allow_request(self, identifier, tokens_required=1):

"""Check if a request is allowed for the given identifier"""

bucket_key = f"{self.key_prefix}:{identifier}:bucket"

last_update_key = f"{self.key_prefix}:{identifier}:last_update"

# Use Redis pipeline for atomic operations

pipe = self.redis.pipeline()

# Get current values or set defaults

pipe.get(bucket_key)

pipe.get(last_update_key)

result = pipe.execute()

current_tokens = float(result[0]) if result[0] else self.bucket_capacity

last_update = float(result[1]) if result[1] else time.time()

# Calculate refill

now = time.time()

time_elapsed = now - last_update

tokens_to_add = time_elapsed * self.refill_rate

# Update tokens

new_tokens = min(self.bucket_capacity, current_tokens + tokens_to_add)

# Check if we have enough tokens

if new_tokens < tokens_required:

return False

# Consume tokens atomically

new_tokens -= tokens_required

# Update Redis

pipe = self.redis.pipeline()

pipe.set(bucket_key, new_tokens)

pipe.set(last_update_key, now)

pipe.execute()

return True

Benefits of Centralized Approach

Consistent Enforcement: All servers reference the same token count

Precise Control: Global rate limits are accurately maintained

Flexibility: Can implement different limits for different users/resources

Considerations for Production Use

Performance: Redis operations add latency to each request

Reliability: Need to handle Redis failures gracefully

Scalability: For extremely high volume APIs, consider:

Sharding rate limits by user/resource

Using local caches with periodic synchronization

Implementing backoff strategies

Further reading resources

Conclusion

The Token Bucket algorithm provides an elegant solution for rate limiting, but requires additional consideration in distributed environments. By centralizing token storage with tools like Redis, we can maintain consistent rate limiting across any number of application instances.

Whether you're protecting your APIs from abuse, managing resource consumption, or ensuring fair usage across your user base, properly implemented distributed rate limiting is an essential component of modern system design.